Project Description

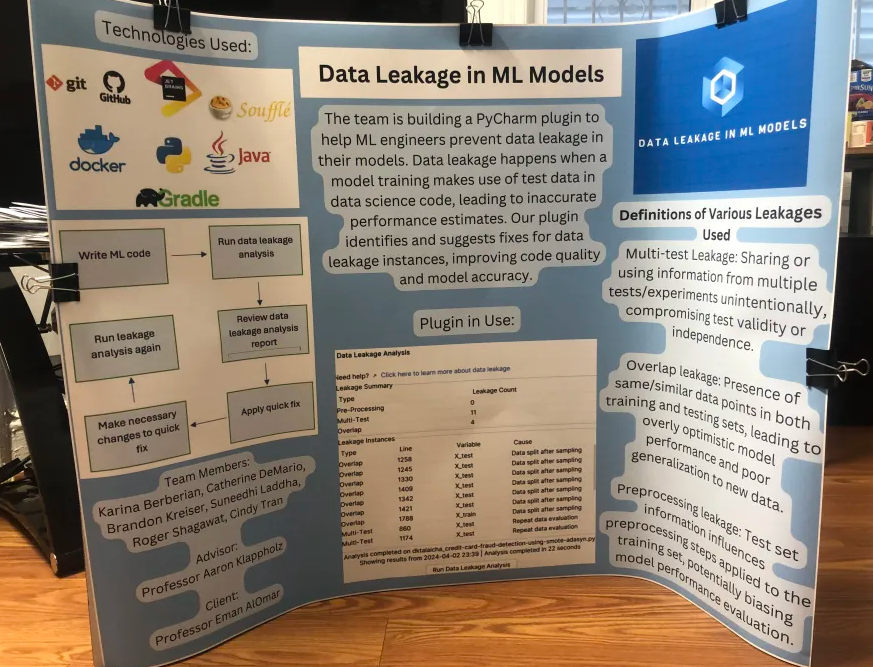

Code quality is of paramount importance in all types of software development settings. Our project seeks to enable machine learning (ML) engineers to write better code by helping them find and fix instances of Data Leakage in their models. Data Leakage is a problem where an ML model is unintentionally trained on data that is not present in the training dataset. As a result, the model effectively "memorizes" the data it trains on, leading to an overly optimistic estimate of model performance and an inability to make generalized predictions. To avoid introducing Data Leakage into their code, ML developers must carefully separate their data into training, evaluation, and test sets. Training data should be used to train the model, evaluation data should be used to confirm the accuracy of a model repeatedly, and test data should be used only once to determine the accuracy of a production-ready model. According to the paper Data Leakage in Notebooks: Static Detection and Better Processes, many model designers do not effectively separate their testing data from their evaluation and training data. We are developing a plugin for the PyCharm IDE that identifies instances of data leakage in ML code and provides suggestions on how to remove the leakage.

Source: Yang, C., Brower-Sinning, R. A., Lewis, G., & Kästner, C. (2022). Data leakage in notebooks: Static detection and Better Processes. Proceedings of the 37th IEEE/ACM International Conference on Automated Software Engineering. https://doi.org/10.1145/3551349.3556918

Team Members (from left to right)

Suneedhi Laddha, Roger Shagawat, Brandon Kreiser, Catherine DeMario, Cindy Tran, Karina Berberian